Multi-Object Tracking (MOT) is an important research area in computer vision. The task is to detect multiple moving objects across consecutive video frames and associate the detections from frame to frame, outputting a unique ID and a trajectory over time for each object. The central challenge lies in solving the closed-loop "detection-association" problem.

In practical applications, MOT methods often encounter low-feature conditions, such as visually identical objects, texture loss, insufficient lighting, and occlusion. These conditions can cause detection failures and make it impossible to associate detections and reconstruct trajectories.

To address these challenges, vision metrology engineers have in recent years refined traditional MOT methods along two main paths.

Path 1: Deep Learning Feature Enhancement MOT. This approach uses deep neural networks to extract deep features of the targets automatically, then combines them with a long short-term memory (LSTM) network that jointly models each target's trajectory and appearance to perform multi-target tracking. Its advantage is that it can process large amounts of data and learn useful features automatically, which makes it suitable for multi-target tracking in some low-feature environments. However, the model's generalization capability is limited: detection degrades when the training samples differ from the actual application scenario.

Path 2: Generative Feature Enhancement MOT. This approach uses generative models to enhance the visual features of detected targets. For example, it generates new images that resemble the actual target but show it from different perspectives and under different lighting conditions. The generated features are then combined with the original features for subsequent target matching and trajectory association. This approach mitigates the difficulty of multi-target tracking in low-feature environments to some extent, but it has drawbacks of its own: high computational cost, sensitivity to the quality of the generative model, and difficulty meeting real-time requirements.

Revealer's algorithm engineers started from the theoretical premise that the "3D motion continuity" of detected targets is more invariant than their "2D appearance differences." Combining this premise with high-speed camera technology, they developed "Multi-Object Tracking (MOT) for Low-Feature Scenes Based on Spatial Clustering." The method proceeds in four technical steps, "geometric reconstruction → spatiotemporal clustering → physical verification → trajectory error correction," and compensates for missing features at the data source, which avoids the dependence on appearance features of "deep learning feature-enhanced MOT" and "generative feature-enhanced MOT." In addition, the spatial clustering and projection operations are lightweight, which keeps the method computationally efficient and suitable for real-time use while closing the detection-association loop in low-feature scenes.

Revealer achieves breakthroughs in four key technical areas, focusing on detection paths and association paths:

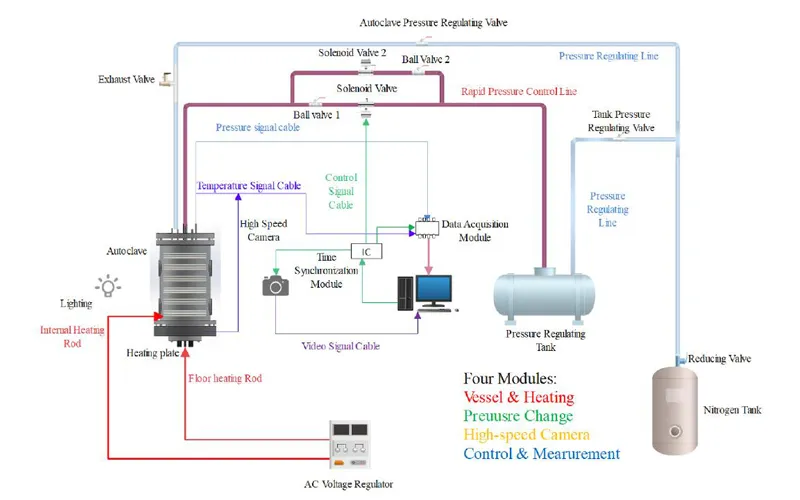

Geometric reconstruction: Dual-view matching and 3D reconstruction based on epipolar constraints. The fundamental matrix F is computed from the intrinsic and extrinsic parameters of the left and right high-speed cameras. Candidate matching point pairs are then selected using the epipolar constraint. Finally, binocular triangulation reconstructs a 3D point cloud, which at this stage still contains noise and outliers.
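
The geometric reconstruction step can be illustrated with a minimal NumPy sketch. It is not Revealer's implementation. It assumes the left camera defines the world frame (P1 = K1[I|0], P2 = K2[R|t]), uses plain linear (DLT) triangulation, and all calibration values, pixel coordinates, and the 1-pixel epipolar threshold are hypothetical.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental_from_calibration(K1, K2, R, t):
    """F such that x2^T F x1 = 0 for cameras P1 = K1[I|0] and P2 = K2[R|t]."""
    E = skew(t) @ R  # essential matrix from the extrinsics
    return np.linalg.inv(K2).T @ E @ np.linalg.inv(K1)

def epipolar_distance(F, x1, x2):
    """Pixel distance from x2 (right image) to the epipolar line of x1."""
    line = F @ np.append(x1, 1.0)  # line coefficients (a, b, c)
    return abs(line @ np.append(x2, 1.0)) / np.hypot(line[0], line[1])

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one left/right correspondence."""
    A = np.stack([x1[0] * P1[2] - P1[0],
                  x1[1] * P1[2] - P1[1],
                  x2[0] * P2[2] - P2[0],
                  x2[1] * P2[2] - P2[1]])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]               # null vector of A (homogeneous 3D point)
    return X[:3] / X[3]

# Hypothetical calibration: identical intrinsics, 0.2 m horizontal baseline.
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 512.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.array([-0.2, 0.0, 0.0])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([R, t.reshape(3, 1)])
F = fundamental_from_calibration(K, K, R, t)

# One left-image detection and two right-image candidates (hypothetical pixels).
left = np.array([690.0, 487.0])
candidates = [np.array([590.0, 487.0]), np.array([590.0, 500.0])]
matches = [x2 for x2 in candidates if epipolar_distance(F, left, x2) < 1.0]
points_3d = [triangulate(P1, P2, left, x2) for x2 in matches]
```

With identical objects, several candidates can lie on the same epipolar line, so the resulting point cloud may contain false 3D points. The later clustering and verification steps exist to remove them.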

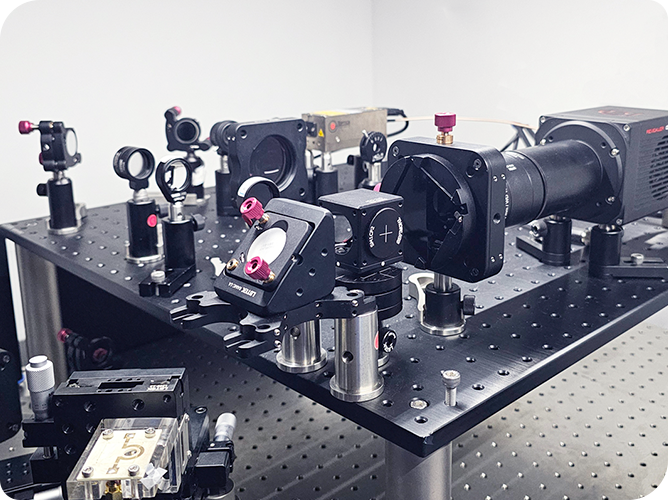

Spatiotemporal clustering: The positions of the 3D points are tracked over multiple frames to construct 3D trajectories. The points are clustered according to spatial consistency and frame-to-frame temporal continuity: points that are close together and follow a consistent motion trend are merged into continuous trajectories. Trajectories that persist for fewer consecutive frames than a threshold are deleted to eliminate unstable or sporadic noise points, which yields a preliminary set of valid 3D trajectories.
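
The clustering described above can be approximated by a greedy frame-to-frame linker. The sketch below is an assumption-laden simplification, not the production algorithm: "consistent motion trend" is modeled as a constant-velocity prediction, association is greedy nearest-neighbor, and the names `link_points`, `max_dist`, and `min_length` are hypothetical.

```python
import numpy as np

def link_points(frames, max_dist=0.05, min_length=5):
    """Link per-frame 3D points into tracks and drop short-lived tracks.

    frames: list of (N_i, 3) arrays, one per frame.
    Returns a list of tracks; each track is a list of (frame_index, xyz).
    """
    active, finished = [], []
    for f, pts in enumerate(frames):
        pts = np.asarray(pts, dtype=float).reshape(-1, 3)
        used = np.zeros(len(pts), dtype=bool)
        still_active = []
        for track in active:
            last = track[-1][1]
            # Constant-velocity prediction once the track has two points.
            pred = last + (last - track[-2][1]) if len(track) >= 2 else last
            if len(pts):
                d = np.linalg.norm(pts - pred, axis=1)
                d[used] = np.inf          # each point joins at most one track
                j = int(np.argmin(d))
                if d[j] <= max_dist:
                    used[j] = True
                    track.append((f, pts[j]))
                    still_active.append(track)
                    continue
            finished.append(track)        # no match: the track ends here
        for j in np.flatnonzero(~used):   # unmatched points start new tracks
            still_active.append([(f, pts[j])])
        active = still_active
    finished.extend(active)
    # Tracks seen in too few consecutive frames are treated as noise.
    return [trk for trk in finished if len(trk) >= min_length]

# Two objects with steady motion plus one spurious point in frame 2.
frames = []
for f in range(6):
    pts = [[0.01 * f, 0.0, 0.0], [1.0, 0.01 * f, 0.0]]
    if f == 2:
        pts.append([5.0, 5.0, 5.0])
    frames.append(np.array(pts))

tracks = link_points(frames)
```

In this toy input the spurious point forms a one-frame track and is discarded, while the two steadily moving objects survive as full-length tracks. A greedy linker can mis-assign points in dense scenes; a global assignment would be a natural refinement.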

Physical verification: Each 3D trajectory is reprojected onto the image planes of the left and right high-speed cameras. The projected trajectories are compared in both images, and duplicate matching trajectories are removed.
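
One way to picture this check is sketched below. The duplicate criterion is an assumption: two trajectories are treated as duplicates when their projections nearly coincide in at least one view over their common frames, and the longer trajectory is kept. The function names, the dict-based track format, and the 2-pixel tolerance are hypothetical.

```python
import numpy as np

def project(P, pts3d):
    """Project (N, 3) points with a 3x4 camera matrix; returns (N, 2) pixels."""
    homog = np.hstack([pts3d, np.ones((len(pts3d), 1))])
    uvw = homog @ P.T
    return uvw[:, :2] / uvw[:, 2:3]

def shares_view(a, b, cameras, pix_tol):
    """True if tracks a and b project to nearly the same 2D path in any view."""
    common = sorted(set(a) & set(b))
    if not common:
        return False
    pa = np.array([a[f] for f in common])
    pb = np.array([b[f] for f in common])
    for P in cameras:
        gap = np.linalg.norm(project(P, pa) - project(P, pb), axis=1).mean()
        if gap < pix_tol:
            return True
    return False

def remove_duplicates(tracks, cameras, pix_tol=2.0):
    """tracks: list of dicts {frame_index: xyz}. Longer tracks take priority."""
    order = sorted(range(len(tracks)), key=lambda i: -len(tracks[i]))
    kept = []
    for i in order:
        if not any(shares_view(tracks[i], tracks[k], cameras, pix_tol) for k in kept):
            kept.append(i)
    return [tracks[i] for i in sorted(kept)]

# Hypothetical stereo rig: left camera at the origin, 0.2 m baseline.
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 512.0], [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

real = {f: np.array([0.1 + 0.01 * f, -0.05, 2.0]) for f in range(6)}
# A ghost on the same left-camera rays (same left pixels, wrong depth).
ghost = {f: 1.5 * real[f] for f in range(4)}
other = {f: np.array([-0.3, 0.1, 2.0]) for f in range(6)}

verified = remove_duplicates([real, ghost, other], [P_left, P_right])
```

Here the ghost trajectory reuses the left-image detections of the real one, which is the one-to-many situation the reprojection check is meant to catch, so only the real and the unrelated trajectory remain.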

Trajectory error correction and reconnection: A three-dimensional quadratic curve is fitted to each trajectory, and the error between the actual trajectory points and the fitted curve is calculated. Intervals whose error exceeds a threshold are marked as abnormal, and the trajectory is disconnected at those segments. The similarity between the remaining trajectory segments is then calculated from their spatial positions and temporal continuity. Segments that meet the conditions are reconnected and smoothed to restore a complete, continuous trajectory.
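
The fit-split-reconnect idea can be sketched as follows. This is an illustrative simplification under stated assumptions: each coordinate is fitted as a quadratic in time with ordinary least squares, the reconnection test extrapolates the earlier segment's fit to the start of the later one, and the thresholds and function names are hypothetical. The final smoothing pass is omitted.

```python
import numpy as np

def split_on_outliers(t, xyz, err_thresh):
    """Fit x(t), y(t), z(t) as quadratics; split where the residual is too large.

    Returns (segments, residual), where segments are half-open index ranges
    (start, stop) of consecutive inlier samples.
    """
    coeffs = np.polyfit(t, xyz, 2)                 # shape (3, 3): one column per axis
    fitted = np.vander(t, 3) @ coeffs              # columns of vander: t^2, t, 1
    residual = np.linalg.norm(fitted - xyz, axis=1)
    good = residual <= err_thresh
    segments, start = [], None
    for i, ok in enumerate(good):
        if ok and start is None:
            start = i
        elif not ok and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(t)))
    return segments, residual

def can_reconnect(t, xyz, seg_a, seg_b, max_gap, max_dist):
    """Check temporal continuity and spatial agreement between two segments."""
    a0, a1 = seg_a
    b0 = seg_b[0]
    if t[b0] - t[a1 - 1] > max_gap or a1 - a0 < 3:
        return False
    coeffs = np.polyfit(t[a0:a1], xyz[a0:a1], 2)   # refit on segment A only
    pred = np.vander(t[b0:b0 + 1], 3) @ coeffs     # extrapolate to start of B
    return bool(np.linalg.norm(pred[0] - xyz[b0]) <= max_dist)

# Synthetic free-fall track sampled at 100 fps with one corrupted sample.
t = np.arange(20) * 0.01
xyz = np.column_stack([1.0 * t, np.zeros_like(t), -4.9 * t ** 2])
xyz[10, 2] += 0.5                                   # hypothetical wrong association

segments, residual = split_on_outliers(t, xyz, err_thresh=0.2)
rejoin = can_reconnect(t, xyz, segments[0], segments[1], max_gap=0.03, max_dist=0.01)
```

The corrupted sample is isolated between two clean segments, and the second segment continues the first segment's extrapolated curve, so the two can be rejoined across the gap. A least-squares fit is itself pulled toward outliers, so a robust or iterative fit may be preferable when many samples are corrupted.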

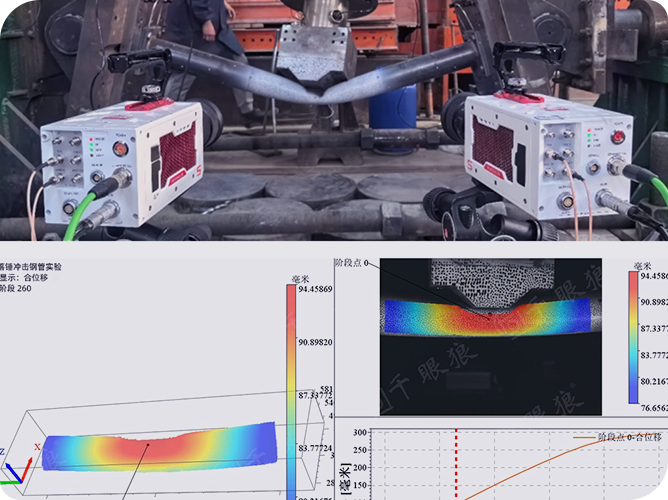

In a drop experiment, hundreds of fast-moving black balls were tracked. The balls' uniform black surfaces lack distinct texture features, which makes this a typical low-feature scene. Accurate position information was obtained with the geometric reconstruction step of the spatial clustering MOT method. 2D projection was then used to eliminate one-to-many mismatches, improving the uniqueness and accuracy of the trajectories. Finally, trajectory fitting analysis identified and corrected erroneous trajectory connections, improving the stability and reliability of the tracking results.

Revealer's "Multi-Object Tracking (MOT) technology based on spatial clustering" addresses the challenge of target tracking in low-feature environments by combining dual-view matching with 3D reconstruction. It uses epipolar constraints to select matching point pairs, obtains accurate position information through 3D reconstruction, and optimizes trajectories with spatial clustering, which reduces mismatches and trajectory breakage. Its noise rejection and trajectory error correction mechanisms improve tracking accuracy and stability. In the future, Revealer will enhance real-time performance through algorithm optimization, further improving detection and association efficiency in high-speed target tracking scenarios.